Last Updated on August 10, 2023 by Mayank Dham

High Performance Computing (HPC) and supercomputers have emerged as a beacon of innovation and exploration in an era driven by data-intensive tasks and complex simulations. By providing unparalleled computational power, these technological marvels have revolutionized a wide range of domains, from scientific research to industrial advancements. This article delves into the world of high-performance computing and supercomputers, examining their significance, evolution, and remarkable impact on a variety of fields.

What is High Performance Computing?

High Performance Computing refers to the utilization of advanced computing techniques and systems to solve complex problems that require immense computational resources. Unlike conventional computers, which are designed for general-purpose tasks, HPC systems are engineered to handle enormous amounts of data, perform intricate simulations, and deliver results at remarkable speeds. HPC’s distinguishing feature lies in its capacity to achieve exceptional performance, often measured in terms of floating-point operations per second (FLOPS).
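To make the FLOPS figure concrete, a system's theoretical peak is commonly estimated as nodes × cores per node × clock rate × floating-point operations each core can complete per cycle. The sketch below applies that estimate; the function names and the cluster figures are hypothetical and chosen only for illustration, and real applications usually sustain well below the theoretical peak.

```python
def peak_flops(nodes, cores_per_node, clock_hz, flops_per_cycle):
    """Theoretical peak, assuming every core completes
    flops_per_cycle floating-point operations on every clock cycle."""
    return nodes * cores_per_node * clock_hz * flops_per_cycle


def format_flops(flops):
    """Render a FLOPS value using the largest SI prefix that fits."""
    prefixes = (("exa", 1e18), ("peta", 1e15), ("tera", 1e12),
                ("giga", 1e9), ("mega", 1e6))
    for prefix, scale in prefixes:
        if flops >= scale:
            return f"{flops / scale:.2f} {prefix}FLOPS"
    return f"{flops:.0f} FLOPS"


# Hypothetical cluster: 100 nodes, 64 cores per node, 2.0 GHz clock,
# 16 floating-point operations per core per cycle.
peak = peak_flops(nodes=100, cores_per_node=64,
                  clock_hz=2.0e9, flops_per_cycle=16)
print(format_flops(peak))  # prints "204.80 teraFLOPS"
```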

High Performance Computing (HPC) in the cloud

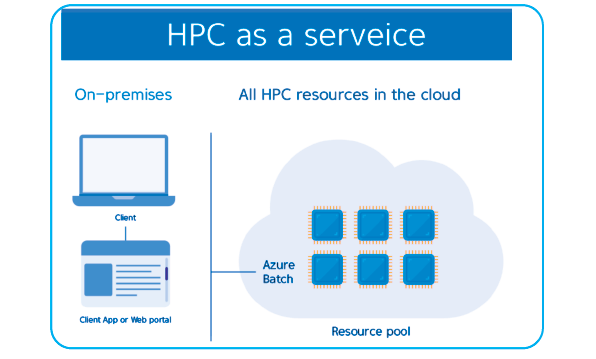

The compute resources needed to analyze big data and solve complex problems are expanding beyond the on-premise compute clusters in the datacenter that are typically associated with HPC and into the resources available from public cloud services.

- Cloud adoption for HPC is central to the transition of workloads from an on-premise-only approach to one that is decoupled from specific infrastructure or location.
- Cloud computing allows resources to be available on demand, which can be cost-effective and allow for greater flexibility to run HPC workloads.

The adoption of container technologies has also gained momentum in HPC. Containers are designed to be lightweight and flexible, and they add little overhead, which improves performance and reduces cost. Containers also help to meet the requirements of many HPC applications, such as scalability, reliability, automation, and security.

Containers package application code, its dependencies, and even user data into a single unit. That makes it easier to share scientific research and findings with a global community across multiple locations, and to migrate applications into public or hybrid clouds, which is why containers are so relevant for HPC environments.

By using containers to deploy HPC apps and workloads in the cloud, you are not tied to a specific HPC system or cloud provider.

What are Supercomputers?

At the apex of the HPC pyramid reside supercomputers, a class of computing machines that epitomize extreme computational prowess. These systems are built to handle tasks of unprecedented complexity, including climate modeling, molecular simulations, and high-resolution simulations of physical phenomena.

Evolution of Supercomputers

The origins of supercomputers can be traced back to the 1960s, when Seymour Cray designed the CDC 6600 at Control Data Corporation. Released in 1964, it is widely regarded as the first supercomputer. Supercomputers have undergone a remarkable transformation since then, with exponential increases in processing power, memory capacity, and technological innovation.

The shift from single-processor designs to multiprocessor architectures, and with it the rise of parallel and cluster computing, propelled supercomputers into new realms of performance. The advent of vector processing, which applies a single instruction to multiple data elements simultaneously, further augmented computational capabilities. In recent years, graphics processing units (GPUs) and field-programmable gate arrays (FPGAs) have been harnessed to achieve even greater parallelism, fostering the development of heterogeneous supercomputing architectures.

Applications and Impact of Supercomputers

Supercomputers have redefined the boundaries of scientific exploration and technological advancement. They play a pivotal role in diverse fields, including:

1. Scientific Research: From unraveling the mysteries of the universe through astrophysical simulations to understanding fundamental particles through high-energy physics experiments, supercomputers enable scientists to tackle questions that were once deemed unanswerable.

2. Climate Modeling: Addressing the challenges posed by climate change demands accurate and detailed climate models. Supercomputers facilitate high-resolution simulations that aid in predicting weather patterns, studying climate dynamics, and assessing the impact of human activities on the environment.

3. Drug Discovery and Biomedical Research: Supercomputers expedite drug discovery by simulating molecular interactions, predicting protein structures, and running simulations that help researchers understand complex biological processes.

4. Engineering and Industrial Applications: Industries leverage supercomputers to perform simulations for designing aircraft, automobiles, and other complex systems. These simulations enhance efficiency, reduce costs, and accelerate product development.

5. Energy Sector: Supercomputers assist in optimizing energy production, enhancing oil and gas exploration, and advancing renewable energy technologies like wind and solar power.

Challenges and Future Directions

Despite their monumental contributions, supercomputers face challenges related to power consumption, cooling solutions, and the growing complexity of software for parallel processing. As the demand for more computational power continues to surge, researchers are exploring avenues like quantum computing and neuromorphic computing to overcome these challenges.

Conclusion

Supercomputers and High Performance Computing are testaments to human ingenuity and technological prowess. They allow us to simulate, analyze, and understand phenomena that were previously out of reach. We can expect even greater breakthroughs in science, engineering, and countless other fields as these systems evolve, pushing the boundaries of what is possible and propelling humanity toward new horizons of knowledge and innovation.

Frequently Asked Questions (FAQs)

Here are some frequently asked questions about high performance computing and supercomputers.

1. What is high performance computing (HPC), and why is it important?

High performance computing (HPC) refers to the use of powerful computers or clusters of computers to solve complex problems that require significant computational resources. It is crucial for tackling large-scale simulations, data analysis, scientific research, and engineering tasks that demand rapid processing of vast amounts of data.

2. What distinguishes a supercomputer from a regular computer?

A supercomputer is a type of computer that is exceptionally powerful and capable of performing computations at a much higher speed than typical computers. Supercomputers are designed for tasks requiring immense processing power, such as weather forecasting, nuclear simulations, and molecular modeling. They often feature specialized architectures, multiple processors, and advanced cooling systems to handle heavy workloads.

3. How are supercomputers ranked and measured for performance?

Supercomputers are ranked using standardized benchmarks. The most widely recognized ranking is the TOP500 list, which orders the 500 most powerful publicly reported systems by their score on the High-Performance Linpack (HPL) benchmark. HPL measures how quickly a system solves a large, dense system of linear equations, and the result is reported in FLOPS (floating point operations per second). Factors such as memory capacity and interconnect performance influence how close a system comes to its theoretical peak.
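The idea behind a Linpack-style measurement can be sketched in a few lines: time the solution of a dense linear system, then divide a conventional operation count of 2/3·n³ + 2·n² by the elapsed time. The code below is a toy illustration in pure Python, not the HPL benchmark itself; the function names are hypothetical, and the rate it reports says far more about the Python interpreter than about the hardware.

```python
import random
import time


def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the caller's data is left untouched.
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        # Pick the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0.0:
            raise ValueError("matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, n):
            factor = m[row][col] / m[col][col]
            for k in range(col, n + 1):
                m[row][k] -= factor * m[col][k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(m[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (m[row][n] - tail) / m[row][row]
    return x


def linpack_style_flops(n, seed=0):
    """Return (estimated FLOPS, max residual) for a random n x n system."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - start
    operations = (2.0 / 3.0) * n ** 3 + 2.0 * n ** 2
    # The residual checks that the timed solve produced a valid answer.
    residual = max(
        abs(sum(a[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n)
    )
    return operations / elapsed, residual


rate, residual = linpack_style_flops(60)
print(f"{rate / 1e6:.1f} megaFLOPS, residual {residual:.2e}")
```

Production benchmark runs use far larger matrices, distribute the work across many nodes, and rely on tuned linear algebra libraries, which is where memory and interconnect performance start to matter.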

4. What are the key challenges in designing and operating supercomputers?

Designing and operating supercomputers presents various challenges, including power consumption, heat dissipation, scalability, and programming complexity. Managing the energy consumption of these high-performance machines is critical due to their substantial power requirements. Additionally, ensuring efficient cooling to prevent overheating is a major challenge, especially as the number of processors and cores increases.

5. How does parallel processing contribute to supercomputer performance?

Parallel processing is a technique where a task is divided into smaller subtasks that can be executed simultaneously by multiple processors or cores. Supercomputers heavily rely on parallel processing to achieve high performance. By distributing computational tasks across numerous processors, supercomputers can solve complex problems faster. However, effectively harnessing parallelism requires specialized programming techniques and tools.
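The divide-and-combine pattern described above can be sketched with Python's standard `concurrent.futures` module. This is a minimal illustration rather than how production HPC codes are written (those typically use tools such as MPI or OpenMP); the function names and the sum-of-squares workload are hypothetical.

```python
from concurrent.futures import ProcessPoolExecutor


def sum_of_squares(bounds):
    """Subtask: sum i*i over the half-open range [start, stop)."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def split_range(n, parts):
    """Divide range(n) into at most `parts` contiguous chunks."""
    step = max(1, -(-n // parts))  # ceiling division, at least 1
    return [(lo, min(lo + step, n)) for lo in range(0, n, step)]


def parallel_sum_of_squares(n, workers=4, executor_cls=ProcessPoolExecutor):
    """Run one subtask per chunk in a worker pool and combine the results."""
    chunks = split_range(n, workers)
    with executor_cls(max_workers=workers) as pool:
        partial_sums = pool.map(sum_of_squares, chunks)
        return sum(partial_sums)
```

Calling `parallel_sum_of_squares(1_000_000)` splits the range into four chunks and sums them in separate processes. On platforms that start worker processes by spawning a fresh interpreter, such as Windows and macOS, that call should sit under an `if __name__ == "__main__":` guard. The `executor_cls` parameter is an illustrative design choice: it lets the same decomposition run on a `ThreadPoolExecutor` for quick experiments.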